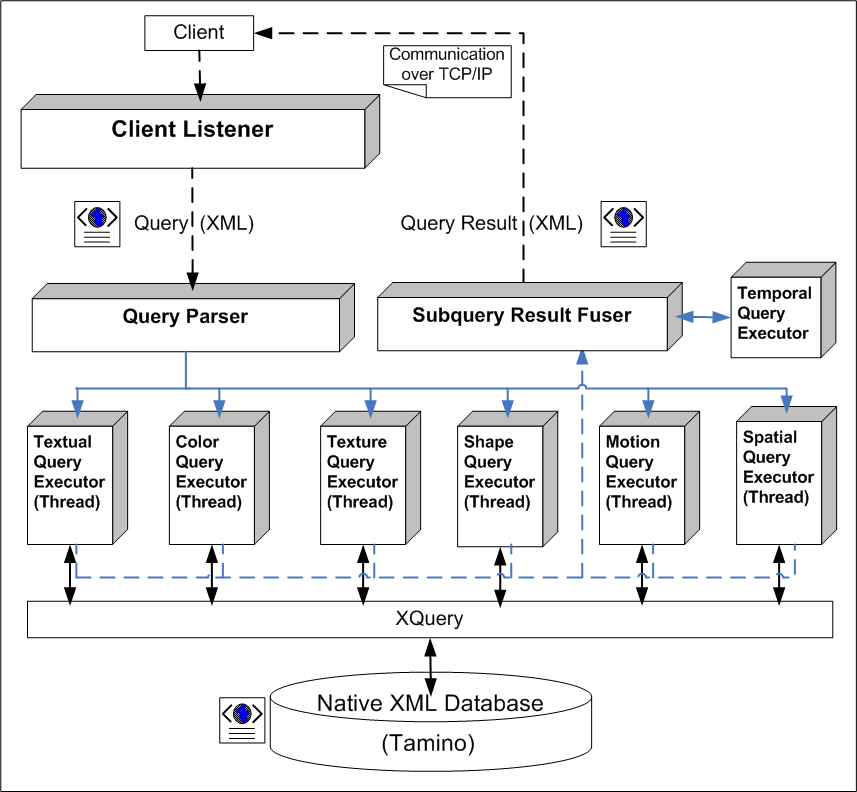

Each incoming query is parsed into subqueries and executed in a multi-threaded fashion, with one thread for each type of subquery,

as shown in Figure 1 below. Queries with the same subquery type are accumulated in a queue and executed on a first-in-first-out (FIFO) basis. For example, subqueries for color descriptors (CSD, SCD, DCD, etc.) are added to the end of queue of Color Query Executor thread and executed in this order.

One XQuery is formed and executed for each video segment (Shot, Keyframe, Still Region, Moving Region) and for each type of subquery.

The XML database returns the XQuery results (which are parsed to extract the actual data -- the descriptors) in XML format. Textual queries are the easiest to execute since the XML database can handle textual queries and no further processing is needed for the similarity computation.

However, the database cannot handle the similarity queries for low-level descriptors. That is, the distance (or similarity) between a query descriptor and a descriptor in the database cannot be computed by the database.

Therefore, the corresponding query execution thread retrieves the relevant descriptors from the database for the video segment in the subquery (e.g., Color Structure descriptors for Keyframes) and computes the distance between the descriptors of the query and the database.

For details please see here (Section 5.2).

The distance measures suggested by MPEG-7 authors for each descriptor are implemented in MPEG-7 XM Reference Software but they are not normative.

The evaluation of distance measures for a set of MPEG-7 descriptors, presented in [ 26 ], shows that although there are better distance measures (e.g., pattern difference, Meehl index), the recommended distance measures of MPEG-7 are among the best.

Therefore, we adapted the distance measures from the XM Reference Software implementation. In the future, other distance measures will also be investigated. For the details of the distance measures please see [ 1 ] and [ 27 ].

The similarity values must be normalized to [0,1] range since we use a weighted average of similarity values for the fusion of subquery results

(please see next section).

We do this, by normalizing the computed distance value with the maximum distance, which is computed by taking the maximum distance from a large set of descriptors extracted from video segments (Shots, Keyframes, Moving Regions, Still Regions).

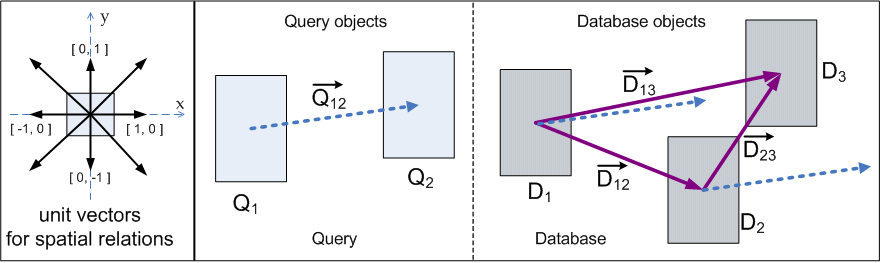

Spatial Query Processing. Spatial locations of Still Regions and Moving Regions are stored in database by their minimum bounding rectangles,

without any preprocessing to extract and store the spatial relations between the regions.

Therefore, spatial similarity between regions are computed at query execution time. For each Still Region or Moving Region in the query,

first, queries related to the properties of the region (textual, color, texture, shape, location, motion) are executed.

Then, resulting video segments undergo spatial query processing to compute the spatial similarities between them.

We use the spatial similarity matching approach described in [19] because of its efficiency and robustness.

First, the vectors connecting the center points of MBRs of objects are computed.

Then, the spatial similarity is computed by the cosine of the angle between the vectors in the query and database,

using vector dot product, shown in Figure 2 below.

The output value is in the range [-1, +1], with +1 indicating identical spatial relation, and -1 opposite spatial relation.

The text-based spatial queries are executed in the same way, by converting each spatial relation query to a unit vector.

For instance, A right B (A is to the right of B) query is converted to a query vector QAB(-1,0), from A to B.

Multiple MBRs are stored in the database for Moving Regions. The spatial similarity is computed for all the MBRs

and most similar one is taken as the final similarity.

For details please see here (Section 5.2).

Temporal queries, if any, are executed after spatial queries by checking if the list of video segments satisfy the temporal relations specified in the query. Spatial queries implicitly enforces a temporal relation between Still and Moving Regions, since they must coappear on the same scene for some time interval in the video to satisfy the spatial relations.

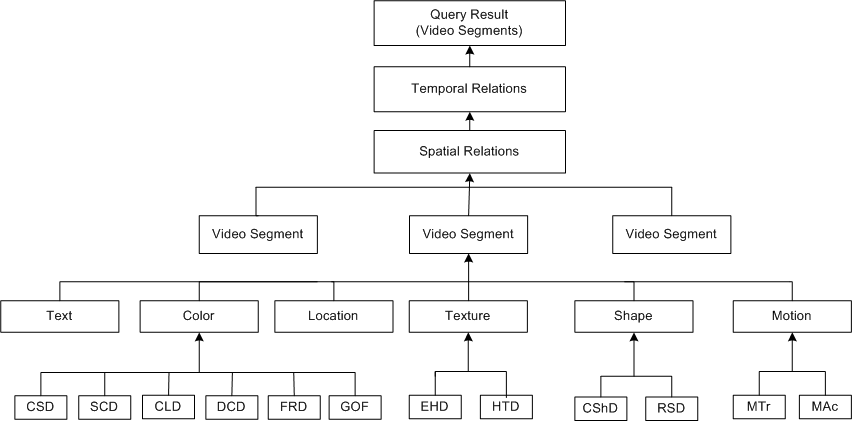

When multiple descriptors, possibly in different modalities, are specified for a query segment,

each is executed as a separate subquery returning a list of video segments with similarity values.

These subquery results must be fused together to come up with the final query results.

This is done in a bottom-up manner as shown in Figure 3.

Each node in the tree has an associated weight and threshold, which can be specified by the user during query specification.

The similarity at each node is computed as the weighted average of the similarities of its children and fusion process continues

upward in the tree until the final query result is obtained. If the similarity value is below the specified threshold, that video segment is discarded.

For details please see here (Section 5.3).