An Augmented Reality Environment for Interactive Crowd Simulation

Project Description

We propose a general-purpose system for interactive, real-time crowd simulation within an Augmented Reality environment. Users will be able to interact with virtual objects and autonomous characters that are modeled with computer-aided design tools. The behaviors of these objects and characters will be modeled and simulated in a virtual domain according to a specified scenario. Information on the positions, directions, and actions of the real users will be transferred to this virtual domain, where it will alter the behavior of the virtual models. Conversely, the states and actions of the computer-controlled autonomous characters and virtual objects will be streamed in real time to the users’ Augmented Reality visualization devices.

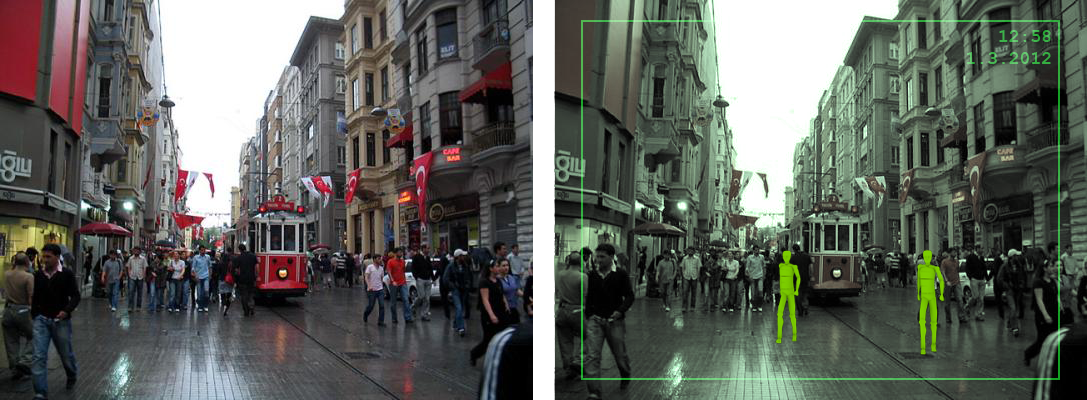

Figures 1 and 2 show an example of an AR environment that can be achieved with this system. In this example, the server simulates two synthetic agents. At each instant, the server sends the updated positions and behaviors of these two agents to the connected AR client. Having received the updates, the AR client software registers and renders the agents on the attached HMD device worn by the real user.
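The server-to-client update described above can be sketched as a small message round trip. This is only an illustration: the `AgentState` fields, the JSON encoding, and the function names are assumptions made for the example, not part of the proposed system's specification.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class AgentState:
    """Hypothetical per-agent record; the field names are illustrative."""
    agent_id: int
    position: tuple      # (x, y, z) in simulation coordinates
    heading_deg: float   # facing direction in degrees
    behavior: str        # e.g. "walking" or "idle"


def encode_update(tick, agents):
    """Server side: serialize one simulation tick into a message."""
    payload = {"tick": tick, "agents": [asdict(a) for a in agents]}
    return json.dumps(payload).encode("utf-8")


def decode_update(message):
    """Client side: rebuild the agent states from a received message."""
    payload = json.loads(message.decode("utf-8"))
    agents = [
        AgentState(
            a["agent_id"],
            tuple(a["position"]),  # JSON lists become tuples again
            a["heading_deg"],
            a["behavior"],
        )
        for a in payload["agents"]
    ]
    return payload["tick"], agents


if __name__ == "__main__":
    # Two synthetic agents, as in the example environment.
    agents = [
        AgentState(1, (0.0, 1.5, 0.0), 90.0, "walking"),
        AgentState(2, (3.0, 0.0, -2.0), 180.0, "idle"),
    ]
    message = encode_update(7, agents)
    tick, received = decode_update(message)
    print(tick, received)
```

In a real deployment the encoded bytes would travel over a network connection, and the client would pass the decoded states on to its registration and rendering stages; both are outside the scope of this sketch.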

A framework will be developed for defining simulation scenarios, specifically for crowd simulation. Example scenarios include urban warfare simulation for armed forces; training simulations that prepare police and gendarmerie forces to manage crowds during mass demonstrations; and training applications for managing emergencies such as fires. Scenarios can also be defined to meet training needs in many fields of formal education. An example scenario is provided in Figure 3.

Compared to existing approaches, Augmented Reality has many advantages for presenting and reinforcing knowledge within a digital environment. Users are not restricted to working at stationary computers: up-to-date hardware for Augmented Reality systems is portable and can be carried with ease. This enables users to communicate face to face and to collaborate toward the goals of the scenario during a simulation. In Augmented Reality applications, synthetic images are merged with real images. Thus, Augmented Reality reinforces learning through physical experience in the users’ natural environment. Users interact with the environment through ordinary body motion. Hence, prolonged use of Augmented Reality does not carry the negative health effects associated with inactivity.

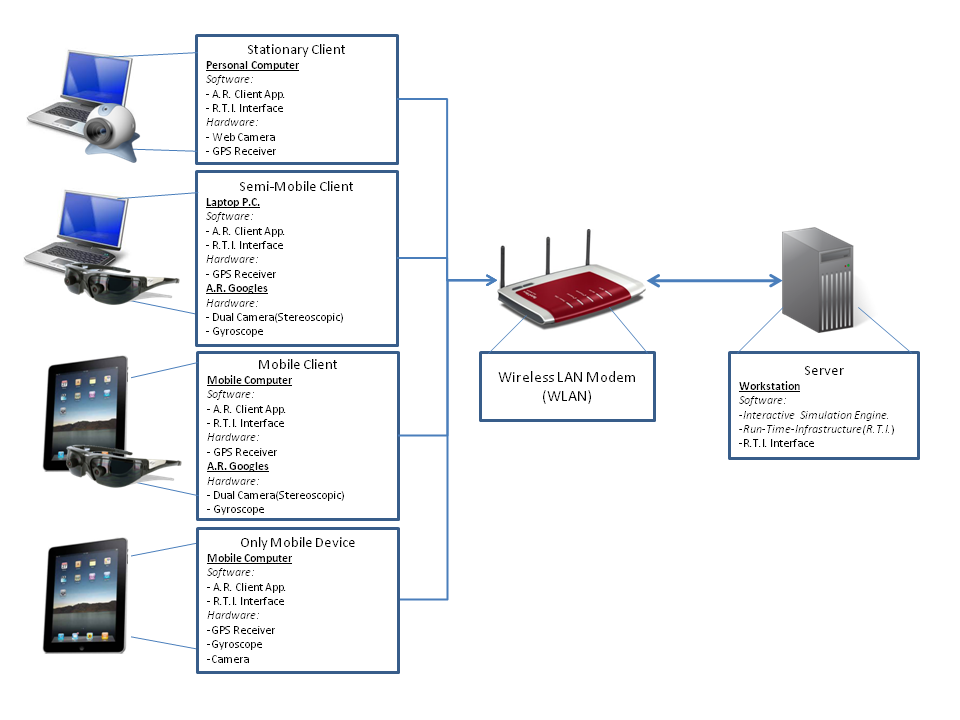

The project will make use of stereoscopic head-mounted display (HMD) devices with integrated cameras and Augmented Reality support. Three-dimensional virtual objects will be rendered and overlaid on the real image sequences captured by the HMD devices. The system will enable users to interact with other users, virtual objects, and autonomous characters. The positions and velocities of the real users and the view frustums of their HMDs will be tracked using the Global Positioning System (GPS), gyroscopes, and accelerometers. This information will then be transferred to the simulation domain and processed there. The physical components of the system, including the HMD and other AR devices (and device combinations), are presented in Figure 4.
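To illustrate how a tracked user pose might be used in the simulation domain, the sketch below tests whether a virtual agent falls inside a user's field of view. It is a deliberate simplification: the full view frustum is reduced to a two-dimensional horizontal wedge, and the 60-degree field of view, the 50-unit range, and the function name are assumptions chosen for the example rather than properties of any particular HMD.

```python
import math


def is_in_horizontal_fov(user_xy, user_yaw_deg, target_xy,
                         fov_deg=60.0, max_range=50.0):
    """Return True if the target lies inside the user's horizontal view wedge.

    user_yaw_deg is the viewing direction, measured counter-clockwise
    from the +x axis. fov_deg and max_range are assumed values.
    """
    dx = target_xy[0] - user_xy[0]
    dy = target_xy[1] - user_xy[1]
    distance = math.hypot(dx, dy)
    if distance == 0.0:
        return True   # target coincides with the user
    if distance > max_range:
        return False  # too far away to be considered

    # Direction from the user to the target, in degrees.
    bearing = math.degrees(math.atan2(dy, dx))
    # Smallest signed difference between bearing and yaw, in [-180, 180).
    offset = (bearing - user_yaw_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= fov_deg / 2.0


if __name__ == "__main__":
    user = (0.0, 0.0)
    print(is_in_horizontal_fov(user, 0.0, (10.0, 0.0)))  # straight ahead
    print(is_in_horizontal_fov(user, 0.0, (0.0, 10.0)))  # 90 degrees to the left
```

A check of this kind could let the server skip streaming agents that a given user cannot currently see, although whether the proposed system does so is not stated in the description.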

Static entities within the real space (such as walls and buildings) will be modeled and simulated as well. The actions of the autonomous agents and other virtual objects will therefore be affected by these static entities.
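One simple way static entities could constrain agent motion is sketched below. It is a minimal illustration under stated assumptions: walls are modeled as axis-aligned rectangles in a two-dimensional plane, and the `Wall` class and `move_agent` function are hypothetical names introduced for this example, not the project's actual obstacle model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Wall:
    """Axis-aligned rectangle standing in for a static real-world entity."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def blocked(x, y, walls):
    """Return True if the point lies inside any wall."""
    return any(w.contains(x, y) for w in walls)


def move_agent(position, velocity, dt, walls):
    """Advance an agent one step, refusing moves that end inside a wall.

    The full move is tried first, then each axis alone, so that an
    agent slides along a wall instead of stopping dead.
    """
    x, y = position
    nx = x + velocity[0] * dt
    ny = y + velocity[1] * dt
    for candidate in ((nx, ny), (nx, y), (x, ny)):
        if not blocked(candidate[0], candidate[1], walls):
            return candidate
    return position  # every option is blocked: stay in place


if __name__ == "__main__":
    walls = [Wall(1.0, -1.0, 2.0, 1.0)]
    # The agent heads into the wall diagonally and slides along it.
    print(move_agent((0.5, 0.5), (1.0, 0.5), 1.0, walls))
```

Only the end point of each step is tested, so a fast agent or a thin wall could allow an agent to pass through; a fuller implementation would test the whole path segment against each obstacle.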