Research

Path-planning for UAVs with Deep Reinforcement Learning

Path planning has been an active research area in recent years. While deep reinforcement learning (DRL) has been applied to various path-planning applications, its use in UAVs has been limited. In this research focus, we look for ways to improve the performance of DRL for path planning in UAVs, and for better architectures that eliminate the need for GPS communication.

Visual Object Tracking with Deep Reinforcement Learning

In this project, we design and develop deep reinforcement learning (RL) based algorithms to track objects in videos. Our 2020 ICPR paper: "Visual Object Tracking in Drone Images with Deep Reinforcement Learning" studies different aspects of a deep RL based tracker to track an object of interest visually in drone images.

This sample video compares our deep RL based visual object tracker's result (shown in red bounding box) to the result of a recent state of the art RL based tracker (shown in blue). The ground truth bounding box is shown in green. The results are seen best in full screen. For further details, please check our ICPR 2020 paper.

Similarity Domains Machine for Shape Analysis

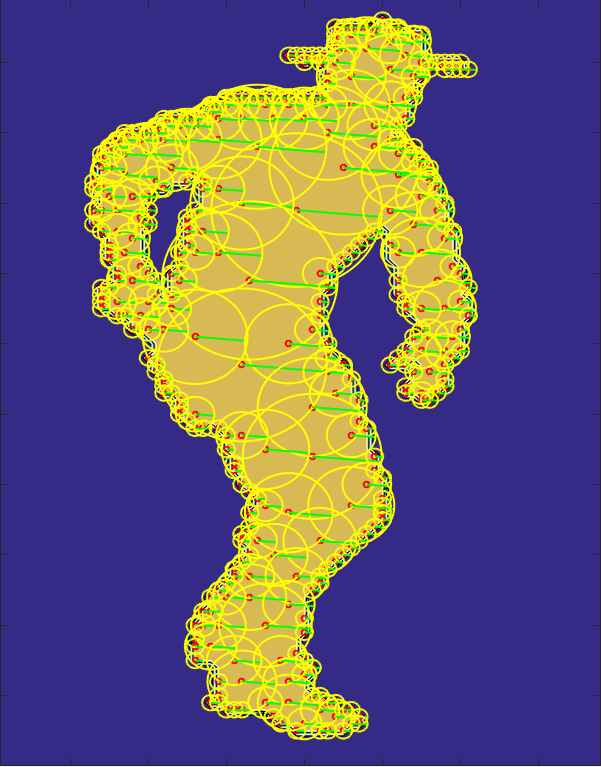

This is a research effort that I pursue to obtain interpretable machine learning algorithms. In particular, my algorithm, the Similarity Domains Machine (SDM), is capable not only of interpreting its computed kernel parameters, but also of using those interpretations to modify the classifier in various ways with linear and explainable operations (such as filtering, scaling and shifting) without re-training. SDM allows data analysis with its interpretable parameters. In particular, SDM can be used to represent shapes with its geometrically meaningful kernel parameters, as demonstrated in my 2018 IEEE Transactions on Image Processing paper. SDMs can also be interpreted as neural networks and can be used for efficient skeleton extraction. I explained how they can be used for skeleton extraction with the interpretable parameters of a neural network in my CVPR 2019 workshop paper.

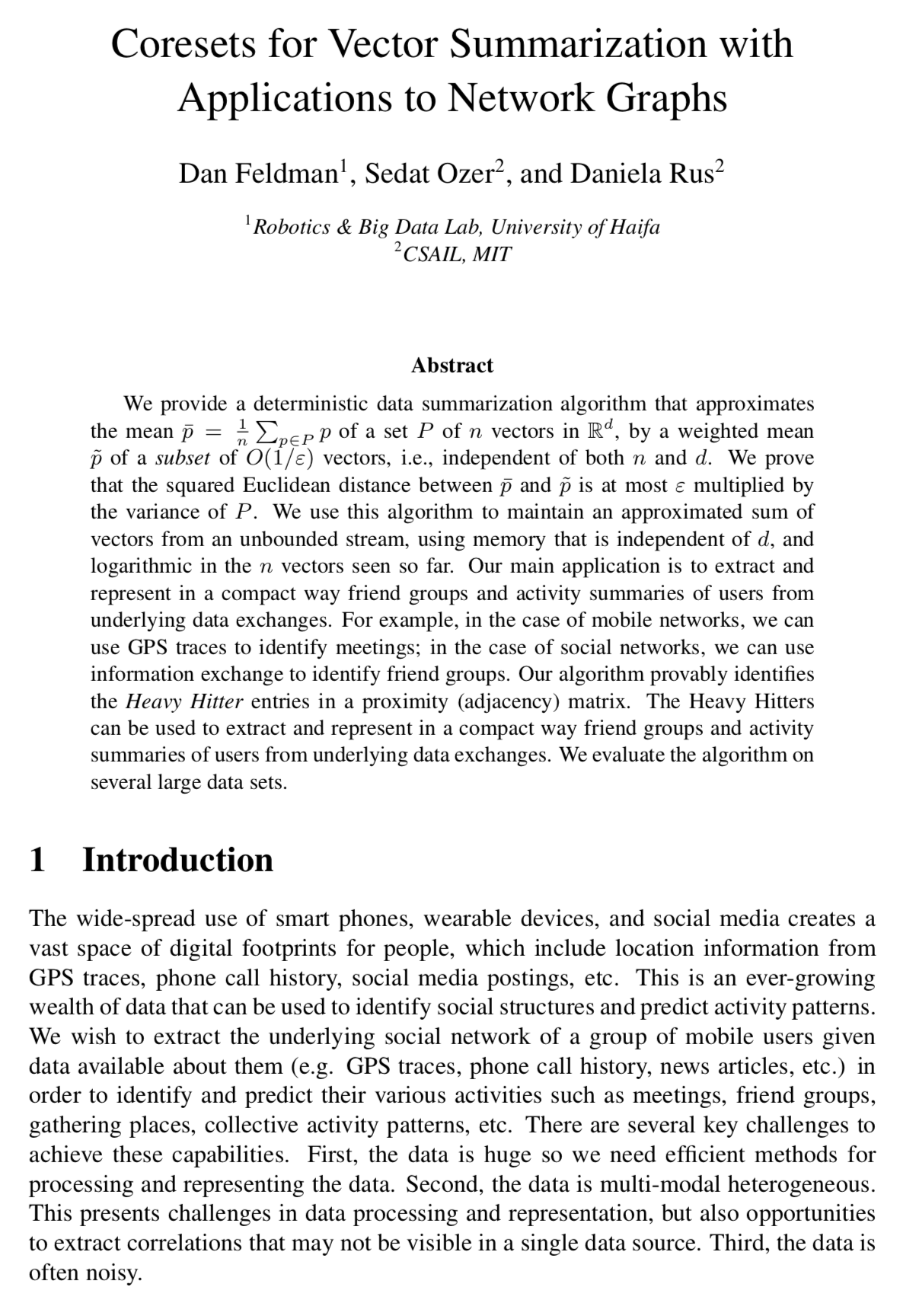

Activity Detection in Location-based Datasets with Coresets

In this project, we developed new techniques to process large location-based datasets for the detection of various types of activities. In particular, we developed and applied coreset-based techniques to summarize streaming GPS-based location datasets and to speed up the computation of dynamic social networks from them, while maintaining approximate accuracy with bounded errors. Our ICML 2017 paper won NVIDIA's Pioneering Research award.

Check NVIDIA's blog to read more about the award.

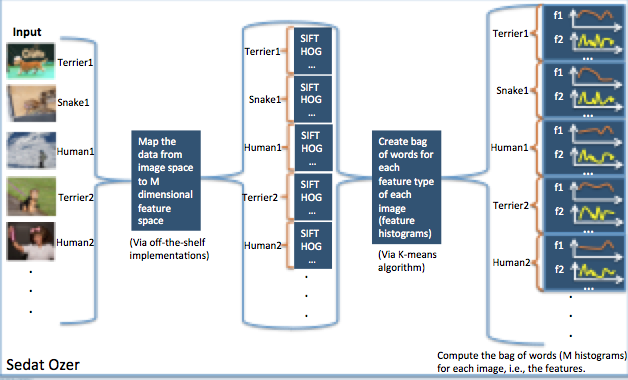

Visual Object Recognition in Large Datasets

If you have ever used Google's image search engine by uploading an image, you may have noticed that, while it works quite impressively, it is still far from perfect. The fundamental problem in such image-based search systems is defining and describing the most "similar image". A successful image-based search system would first analyze the contents of the given input image, and then list the images with the most similar content among all the available images in a given database (such as Google's image database). In this project, I developed various steps of such an image retrieval system. These steps included image analysis, feature computation, feature selection and classification. Check our related papers: our IEEE Asilomar paper or my book chapter in Computer Vision Handbook.
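
The retrieval step described above (compare the query's features with every database image and list the closest ones) can be sketched as follows. This is only an illustration: it assumes that each image has already been reduced to a numeric feature vector, and the cosine similarity measure, the function names and the toy feature values are all hypothetical choices, not the features or classifiers used in the papers.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    norm_a = math.sqrt(sum(v * v for v in a))
    norm_b = math.sqrt(sum(v * v for v in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return sum(p * q for p, q in zip(a, b)) / (norm_a * norm_b)


def retrieve(query: Sequence[float],
             database: Dict[str, Sequence[float]],
             top_k: int = 3) -> List[str]:
    """Return the names of the `top_k` database images most similar to the query."""
    ranked = sorted(database,
                    key=lambda name: cosine_similarity(query, database[name]),
                    reverse=True)
    return ranked[:top_k]


# Hypothetical three-dimensional features, for illustration only.
database = {
    "cat.png": [1.0, 0.0, 0.0],
    "dog.png": [0.9, 0.1, 0.0],
    "car.png": [0.0, 0.0, 1.0],
}
print(retrieve([1.0, 0.0, 0.0], database, top_k=2))
```

In a real system the quality of the ranking depends almost entirely on how the feature vectors are computed and selected, which is where most of the effort in this project went.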

Activity Detection in Scientific Visualization

In this project, we look for a way to "extract" an "activity" in time-varying scientific data sets. As an example, consider the videos shown on the left. The data set contains a Computational Fluid Dynamics (CFD) simulation consisting of 100 time steps. The variable being visualized is the vorticity magnitude. The first video visualizes the evolution of all the extracted vortices over time.

Now assume that you are not interested in seeing all the vortices in this data set and are instead looking only for the vortices performing a specific activity. Consider that the activity of interest is the "merge-split" activity, in which individual vortices first merge and then this new "combined feature" splits again within the next 5 time steps. Searching manually for all the instances (or even for a single instance) of this activity is a hard task even in this small data set, whereas an automated process would extract all the instances easily. Therefore, in this project, we look for a way to first describe and model an activity, and then extract all the instances of that activity within the data set. The second video highlights the instances of the "merge-split" activity found in the data set using our technique.
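
As a rough illustration of the "merge-split" search, the sketch below scans a list of tracked events for merges whose combined feature splits again within 5 time steps. It assumes that a feature tracker has already produced merge and split events; the `Event` tuple, the `find_merge_split` function and the feature ids are hypothetical and are not the activity model from the dissertation or the TVCG paper.

```python
from typing import Dict, List, NamedTuple, Tuple


class Event(NamedTuple):
    step: int      # time step at which the event occurs
    kind: str      # "merge" or "split"
    feature: int   # id of the combined feature (created by a merge, consumed by a split)


def find_merge_split(events: List[Event], window: int = 5) -> List[Tuple[int, int, int]]:
    """Return (feature, merge_step, split_step) for every merge whose
    combined feature splits again within `window` time steps."""
    merge_step: Dict[int, int] = {}   # combined feature id -> step at which it was formed
    hits: List[Tuple[int, int, int]] = []
    for event in sorted(events, key=lambda e: e.step):
        if event.kind == "merge":
            merge_step[event.feature] = event.step
        elif event.kind == "split" and event.feature in merge_step:
            start = merge_step.pop(event.feature)
            if 0 < event.step - start <= window:
                hits.append((event.feature, start, event.step))
    return hits


# Hypothetical events: feature 7 merges at step 3 and splits at step 6 (a match);
# feature 9 merges at step 10 but splits only at step 20 (too late).
events = [
    Event(3, "merge", 7),
    Event(6, "split", 7),
    Event(10, "merge", 9),
    Event(20, "split", 9),
]
print(find_merge_split(events))
```

Describing the activity as an ordered pattern of events with a time constraint is what makes the search automatic; other activities would need their own pattern.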

For more information on this project, check my Ph.D. dissertation or our IEEE TVCG paper. (You can also check this old SciViz page that I prepared some time ago.)

Group Tracking in Scientific Visualization

As in the computer vision field, where the purpose is mainly analyzing video data of humans, there are groups in scientific data sets. A group is fundamentally a set of objects that are related "somehow". This relation can be purely logical (such as a group of all green objects) or also physical (such as a flock of birds, a school of fish or a set of stars, i.e. a galaxy). The definition of a group changes from domain to domain, and this makes it harder to find a generic framework that could help scientists define, extract and follow the evolution of groups in their data sets. Moreover, as the data dimensions increase, it becomes more and more apparent that smarter and more meaningful abstractions are necessary in large data visualization.

In this work, to help with the above-mentioned problems, we propose using similarity functions to define a (physical) group in scientific visualization. Similarity functions can map the physical group definitions in scientific domains into the computational domain. Once a similarity function is defined, a clustering algorithm can be used to extract the groups in scientific simulations based on the similarity function. When groups are determined, it is necessary to track them to understand their evolution over time (in time-varying data sets). Therefore, in this study, we proposed a group tracking scheme in which we track groups of objects (features) as well as the individual objects. Group tracking allows us to define and detect new types of events such as cross-group, partial or full merge, and partial or full split. (See the video above illustrating those events.)
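
The idea of defining groups through a similarity function and then clustering can be sketched as below. Everything here is an assumption made for illustration: the distance-based similarity, the threshold, the single-linkage grouping and the `overlaps` helper (which assumes object ids persist across time steps) are hypothetical stand-ins, not the clustering or tracking code available through Vizlab.

```python
import math
from typing import Callable, Dict, List, Set, Tuple

Point = Tuple[float, float]


def distance_similarity(a: Point, b: Point) -> float:
    """Hypothetical similarity: 1.0 for coincident objects, decaying with distance."""
    return 1.0 / (1.0 + math.dist(a, b))


def extract_groups(objects: Dict[int, Point],
                   similarity: Callable[[Point, Point], float],
                   threshold: float) -> List[Set[int]]:
    """Link every pair of objects whose similarity is at least `threshold`;
    each connected set of objects forms one group (single-linkage clustering)."""
    parent = {i: i for i in objects}

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]   # path compression
            i = parent[i]
        return i

    ids = sorted(objects)
    for n, i in enumerate(ids):
        for j in ids[n + 1:]:
            if similarity(objects[i], objects[j]) >= threshold:
                parent[find(i)] = find(j)

    groups: Dict[int, Set[int]] = {}
    for i in ids:
        groups.setdefault(find(i), set()).add(i)
    return sorted(groups.values(), key=min)


def overlaps(prev: List[Set[int]], curr: List[Set[int]]) -> Dict[int, List[int]]:
    """For each current group, list the previous groups that share members with it.
    More than one previous group suggests a (partial or full) group merge."""
    return {c: [p for p, old in enumerate(prev) if old & new]
            for c, new in enumerate(curr)}


# Two hypothetical time steps: two separate groups that later come together.
step0 = {1: (0.0, 0.0), 2: (1.0, 0.0), 3: (10.0, 10.0), 4: (10.5, 10.0)}
step1 = {1: (5.0, 5.0), 2: (5.5, 5.0), 3: (6.0, 5.0), 4: (6.5, 5.0)}
g0 = extract_groups(step0, distance_similarity, threshold=0.4)
g1 = extract_groups(step1, distance_similarity, threshold=0.4)
print(g0, g1, overlaps(g0, g1))
```

Swapping in a different similarity function changes what counts as a group without touching the clustering or the tracking step, which is the point of separating the two.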

A recent version of this code is available through Vizlab, Rutgers or through me. Here is the IEEE LDAV paper summarizing that work.

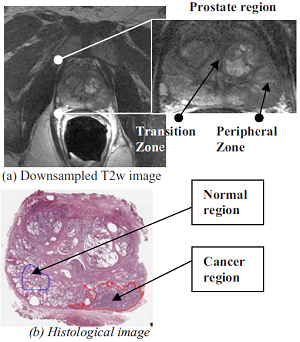

Prostate Cancer Segmentation in MultiSpectral-MRI data

In this project, we (along with our collaborators from the University of Toronto) worked on using various data types that come from different imaging modalities to detect prostate cancer, and checked whether combining the information from such different modalities would increase the accuracy of automated prostate cancer segmentation. Our results indicated that using more than one modality in MRI data does indeed increase the accuracy of prostate cancer segmentation with automated tools. In this work, we showed that supervised techniques can work better than unsupervised techniques for prostate cancer segmentation. We discussed that using the traditional thresholding scheme is not always the best solution for classifier-based prostate cancer segmentation techniques. Therefore, we proposed various techniques for the training part to find "a better" threshold value that increases the segmentation efficiency for a given criterion such as the best accuracy, the best Dice measure, the best sensitivity value, etc.
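
A minimal sketch of searching for "a better" threshold on training data is shown below. It assumes the classifier outputs one score per voxel and that ground-truth labels are available; the function names and the toy scores are hypothetical, and the exhaustive sweep is just one simple way to do the search, not necessarily the procedure from the papers.

```python
from typing import Callable, Sequence, Tuple

Counts = Tuple[int, int, int, int]   # (tp, fp, tn, fn)


def confusion(scores: Sequence[float], labels: Sequence[int], threshold: float) -> Counts:
    """Count true/false positives/negatives when predicting 'cancer' for score >= threshold."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted = score >= threshold
        if predicted and label:
            tp += 1
        elif predicted:
            fp += 1
        elif label:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def accuracy(tp: int, fp: int, tn: int, fn: int) -> float:
    return (tp + tn) / (tp + fp + tn + fn)


def dice(tp: int, fp: int, tn: int, fn: int) -> float:
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def sensitivity(tp: int, fp: int, tn: int, fn: int) -> float:
    return tp / (tp + fn) if tp + fn else 0.0


def best_threshold(scores: Sequence[float], labels: Sequence[int],
                   criterion: Callable[[int, int, int, int], float]) -> float:
    """Try every observed score as a threshold and keep the one that maximizes
    `criterion` (ties go to the lowest threshold)."""
    candidates = sorted(set(scores))
    return max(candidates, key=lambda t: criterion(*confusion(scores, labels, t)))


# Hypothetical training scores and labels (1 = cancer, 0 = healthy).
scores = [0.1, 0.4, 0.35, 0.8]
labels = [0, 0, 1, 1]
print(best_threshold(scores, labels, dice), best_threshold(scores, labels, sensitivity))
```

Different criteria can pick different thresholds on the same scores, which is why the criterion has to be chosen for the clinical question at hand.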

In this work, we used Support Vector Machines, Relevance Vector Machines (Sparse Bayesian Learning) and Markov Random Fields to segment prostate cancer. We compared the results by computing the area under the curve (AUC) values for each classifier. We demonstrated that using AUC values is more reliable when comparing the performance of different classifiers than just comparing the standard outputs, since changing the threshold of a classifier is a relatively simple task.
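
To make the threshold-independence of AUC concrete, the sketch below computes it as the fraction of (positive, negative) pairs that the classifier ranks correctly, which is a standard equivalent of the area under the ROC curve. The function name and the toy scores are hypothetical; this is an illustration, not the evaluation code used in the papers.

```python
from typing import Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Area under the ROC curve, computed as the probability that a randomly
    chosen positive sample scores higher than a randomly chosen negative one
    (ties count as one half)."""
    positives = [s for s, y in zip(scores, labels) if y]
    negatives = [s for s, y in zip(scores, labels) if not y]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical classifier scores and labels (1 = cancer, 0 = healthy).
scores = [0.1, 0.4, 0.35, 0.8]
labels = [0, 0, 1, 1]
print(auc(scores, labels))
```

Because only the ordering of the scores matters, rescaling the scores or moving the decision threshold leaves the AUC unchanged, whereas accuracy at a fixed threshold can change a lot.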

Read more about this project in our paper (published at the prestigious Medical Physics Journal) or in our IEEE ISBI paper.

Generalized Chebyshev Kernels with Generalized Chebyshev Functions

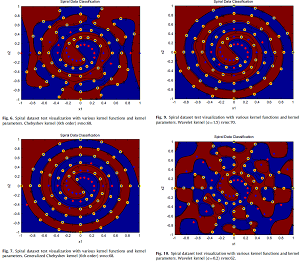

In this work, we presented a way to increase the accuracy of the previously presented Chebyshev kernels. To do that, we first presented a way to apply Chebyshev polynomials to vector inputs by introducing "Generalized Chebyshev Polynomials". Then, with examples, we demonstrated how the proposed generalized Chebyshev polynomials (of both the first and second kinds) can be used to create a set of kernel functions. Moreover, we studied the SVM performance vs. the kernel parameter and found that, for some kernels, the performance can change drastically with the kernel parameter when compared to the other kernel functions. We also discussed some properties of generic kernel functions, where we claimed that first applying the kernel function to the individual features (in a vector) and then multiplying the results to obtain the final result for a given pair of input vectors may not be a good approach to use in SVM learning in general (see the figure on the left and our Pattern Recognition paper for further details).
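
For readers who want a feel for the construction before opening the paper, here is a small Python sketch of one way to extend the first-kind Chebyshev recurrence to vector inputs and turn it into a kernel. Please treat it as an assumption-laden illustration: the vector recurrence (T_0 = 1, T_1 = x, T_j = 2 x T_{j-1}^T - T_{j-2}), the weight 1 / sqrt(m - <x, z>), the `eps` guard and all function names are my shorthand here and may differ from gencheb.m and chebkernel.m; the paper has the exact definitions. Inputs are assumed to be scaled so that every entry lies in [-1, 1].

```python
import math
from typing import List, Sequence, Union

Term = Union[float, List[float]]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(p * q for p, q in zip(a, b))


def gen_cheb_first_kind(x: Sequence[float], degree: int) -> List[Term]:
    """T_0 .. T_degree for a vector x, assuming T_0 = 1, T_1 = x and
    T_j = 2 x T_{j-1}^T - T_{j-2}. Even orders are scalars, odd orders are vectors."""
    xs = [float(v) for v in x]
    terms: List[Term] = [1.0, xs]
    for j in range(2, degree + 1):
        prev, prev2 = terms[j - 1], terms[j - 2]
        if j % 2 == 0:
            # prev is a vector and prev2 a scalar: 2 <x, prev> - prev2
            terms.append(2.0 * dot(xs, prev) - prev2)
        else:
            # prev is a scalar and prev2 a vector: 2 * prev * x - prev2
            terms.append([2.0 * prev * xi - pi for xi, pi in zip(xs, prev2)])
    return terms[:degree + 1]


def gen_cheb_kernel(x: Sequence[float], z: Sequence[float],
                    degree: int = 3, eps: float = 1e-6) -> float:
    """Sum of T_j(x) . T_j(z) for j = 0..degree, divided by sqrt(m - <x, z> + eps),
    where m is the input dimension. `eps` is a hypothetical guard against
    division by zero when <x, z> reaches m."""
    total = 0.0
    for tx, tz in zip(gen_cheb_first_kind(x, degree), gen_cheb_first_kind(z, degree)):
        total += dot(tx, tz) if isinstance(tx, list) else tx * tz
    return total / math.sqrt(len(x) - dot(x, z) + eps)


print(gen_cheb_kernel([0.2, -0.4], [0.1, 0.3], degree=3))
```

For a one-dimensional input this reduces to the ordinary Chebyshev polynomials (for example, the third term becomes 4t^3 - 3t), and the whole vector enters each term at once rather than feature by feature.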

In summary, in this work we presented approaches, techniques and examples to generate new kernel functions using Chebyshev polynomials, and discussed that an SVM with Gaussian kernels can be considered a clustering algorithm that focuses on the edges between two given classes.

Since I have received recent requests, I posted some MATLAB files related to the Chebyshev polynomials and Chebyshev kernels below. There are two .m files below that do the same work as a Chebyshev kernel (gencheb.m and chebkernel.m). I wrote those files quickly for those who would like to study Chebyshev kernels, and both are expected to provide the same result. However, please keep in mind that neither is computationally optimal code. And again, as described in my Pattern Recognition paper, please remember that, especially for the generalized Chebyshev kernel, you might need a normalization step prior to using the kernel functions. Check the paper for further details. Each file has its own description within itself. Use them at your own risk, no warranty is provided! :)

FILES: